Responsible AI for UK Marketing: Why Your Agency Needs a Framework Now

8 min read · Last updated 31 January 2026

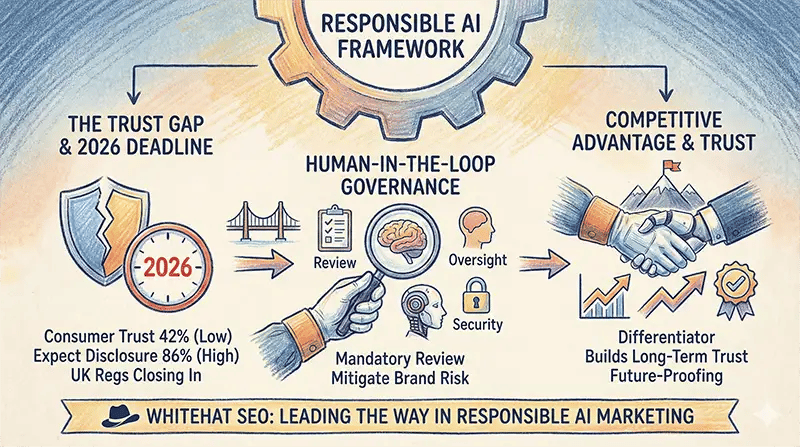

UK marketing teams should adopt AI responsibly by implementing governance frameworks before anticipated 2026 legislation makes it mandatory. With only 42% of UK consumers trusting AI systems and 86% expecting disclosure, responsible AI adoption isn't a compliance burden—it's a competitive differentiator that builds trust whilst competitors scramble to catch up.

This isn't a balanced explainer about the pros and cons of AI governance. Whitehat SEO takes a clear position: the current regulatory window in the UK is an opportunity to establish responsible AI practices on your terms, not an invitation to delay. We've worked with B2B marketing teams navigating this transition, and the evidence is unambiguous—agencies that lead on responsible AI will win the trust race.

The UK's Regulatory Window Closes in 2026

The UK has deliberately chosen a different path from the EU's prescriptive AI Act, adopting a principles-based approach that empowers existing regulators rather than creating new AI-specific laws. The government's five core principles (safety, security and robustness; appropriate transparency and explainability; fairness; accountability and governance; and contestability and redress) apply across all sectors but are interpreted contextually by bodies like the ICO, FCA, and ASA.

This matters because it means UK marketing agencies currently have flexibility that EU competitors don't enjoy. No blanket disclosure requirements exist for AI-generated content in UK advertising. No mandatory risk classifications. No €35 million penalty regime—yet. But this window is closing. Secretary of State Liz Kendall indicated in December 2025 that the government is actively considering AI legislation, likely focusing on specific high-risk areas rather than a comprehensive Act.

The smart move isn't to exploit this window by delaying governance—it's to establish responsible AI practices now, while you control the terms. Whitehat SEO's AI governance consulting helps B2B teams build frameworks that satisfy current best practice and will adapt smoothly when legislation arrives. Companies that wait until compliance is mandatory will face rushed implementations, talent shortages, and higher costs.

The Trust Gap Your Competitors Are Ignoring

KPMG's 2025 survey of 48,000 people across 47 countries places the UK in the bottom third for AI trust—only 42% of the UK public are willing to trust AI systems. More critically for B2B marketers, 72% of consumers are unsure whether online content can be trusted due to potential AI generation, and 80% believe AI regulation is required.

The transparency expectation is stark and non-negotiable: YouGov research shows 86% of Britons believe it's important to disclose AI usage, whilst Forrester's 2024 data confirms 75%+ agree companies should disclose AI use in customer interactions. Yet here's the gap—only 25% of consumers think they can recognise AI-generated content. They can't tell, but they expect to be told.

This creates a trust arbitrage opportunity. Agencies that proactively disclose AI usage position transparency as a trust signal, not a confession. Those that don't disclose face a different risk: 52% of consumers report reduced engagement when they suspect undisclosed AI content. In B2B marketing, where relationship trust is paramount, this gap becomes a chasm.

Generational patterns matter for B2B targeting too. While 56% of 18- to 34-year-olds show interest in AI, older decision-makers, who are often the budget holders in B2B purchases, are more likely to disengage from brands posting AI content they perceive as inauthentic. Whitehat's view: B2B marketers should default to disclosure in all contexts. The upside of proactive transparency far outweighs any perceived risk of looking less sophisticated.

How the UK Differs from EU and US Approaches

Understanding the regulatory divergence is essential for UK businesses with international operations or clients. The three major markets have taken fundamentally different approaches, and this creates both complexity and opportunity for agencies that understand the landscape.

| Aspect | UK | EU | US |
|---|---|---|---|
| Approach | Principles-based, context-specific | Risk-based, prescriptive | Fragmented, sector-specific |
| Status | No comprehensive law (yet) | In force since August 2024, with obligations phasing in through 2027 | 1,000+ state bills pending |
| Deepfake disclosure | No blanket requirement | Mandatory (from August 2026) | Varies by state |
| Penalties | Via existing regulators | Up to €35M or 7% of global annual turnover, whichever is higher | State-dependent |
| New regulator | No; uses existing bodies | EU AI Office + national authorities | No federal AI regulator |

The critical nuance for UK businesses: the EU AI Act has extraterritorial reach. If you place an AI system on the EU market, or your AI system's output is used within EU territory, you can be subject to the Act regardless of where you're headquartered. This means many UK agencies face dual-track compliance whether they like it or not.

The UK's position isn't about having fewer rules—it's about having different rules, interpreted through existing regulatory powers. The ICO positions itself as a "de facto AI regulator" given data protection's central role in AI systems. The ASA has confirmed that advertisers cannot abdicate responsibility for creative content regardless of whether AI generated it. The principles-based approach doesn't mean principle-free.

What Responsible AI Means for Marketing Teams Specifically

Generic AI ethics frameworks don't translate well to marketing operations. The ISBA/IPA 12 Guiding Principles for Generative AI in Advertising provide UK-specific, industry-relevant guidance that marketing teams should adopt as baseline standards. The core principles most relevant to B2B marketing: AI should not undermine public trust in advertising, appropriate human oversight must be maintained, and transparency is required where AI features prominently and is unlikely to be obvious to consumers.

The DMA's position captures the emerging consensus that Whitehat SEO endorses: "The human-AI team is our best future, with AI operating as a tool that humans use to assist and enhance our own abilities... we must never forget the people AI is intended to serve." This isn't anti-AI sentiment—it's recognition that AI without human oversight creates brand risk, quality drift, and trust erosion.

GDPR creates specific obligations for AI-powered marketing. Under UK GDPR, Data Protection Impact Assessments are mandatory where processing is likely to result in a high risk to individuals, for example systematic profiling with significant effects or the use of new technologies likely to pose a high risk. For AI marketing tools, DPIAs must address algorithmic bias, accuracy, explainability limitations, and third-party vendor risks. Article 22 automated decision-making provisions typically don't apply to marketing personalisation, but the ICO warns there could be situations in which marketing may have a significant effect, particularly for vulnerable audiences.
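The DPIA triggers above can be turned into a consistent first-pass screening question for every AI tool. The sketch below is a simplified illustration, not the ICO's test: the `AIToolUse` record, its field names, and the rule that new technology only counts alongside another risk factor are all hypothetical assumptions. A real screening should follow the ICO's own DPIA guidance.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AIToolUse:
    """Hypothetical description of one AI use case in a marketing workflow."""
    name: str
    processes_personal_data: bool
    systematic_profiling: bool   # e.g. lead scoring, automated segmentation
    significant_effects: bool    # e.g. affects pricing or access to services
    new_technology: bool         # e.g. a newly adopted AI tool
    vulnerable_audience: bool = False


def dpia_screening(use: AIToolUse) -> tuple[bool, list[str]]:
    """Return (dpia_indicated, reasons) under the simplified, assumed rule set."""
    if not use.processes_personal_data:
        return False, ["no personal data processed"]
    reasons = []
    # Vulnerable audiences can make otherwise routine marketing effects significant.
    if use.systematic_profiling and (use.significant_effects or use.vulnerable_audience):
        reasons.append("systematic profiling with potentially significant effects")
    # Assumption: new technology counts as high risk only alongside another factor.
    if use.new_technology and (use.systematic_profiling or use.vulnerable_audience):
        reasons.append("new technology combined with profiling or a vulnerable audience")
    return bool(reasons), reasons


lead_scoring = AIToolUse("lead scoring", processes_personal_data=True,
                         systematic_profiling=True, significant_effects=True,
                         new_technology=True)
ideation = AIToolUse("blog ideation", processes_personal_data=False,
                     systematic_profiling=False, significant_effects=False,
                     new_technology=True)
print(dpia_screening(lead_scoring))
print(dpia_screening(ideation))  # (False, ['no personal data processed'])
```

Returning the reasons alongside the yes/no answer gives the DPIA owner a starting point for documenting why an assessment was, or was not, carried out.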

The B2B Marketing Agency Report 2025 found 100% of the 83 agencies surveyed use AI in some capacity. Yet the KPMG study reveals only 27% of UK workers have AI education or training, 39% have uploaded company information into public AI tools, and 58% have relied on AI output without evaluating accuracy. This gap between adoption and governance is where brand damage happens.

Building Your AI Governance Framework

Proportionate governance is key—the scale of governance should match the risk, not the organisation size. A marketing team using AI for content ideation needs less formal governance than one deploying AI for automated customer decisions affecting pricing or access to services. Whitehat SEO's AI policy template provides a starting point that scales with your needs.
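One way to make "governance proportionate to risk" concrete is a simple tiering rule that looks at what the AI output affects rather than how big the team is. This is a minimal sketch: the three tiers, their names, and the controls attached to each are hypothetical and not taken from any standard, but the two classification questions mirror the contrast above between content ideation and automated decisions on pricing or access.

```python
from enum import Enum


class Tier(Enum):
    LIGHT = "light"        # e.g. ideation, internal drafts
    STANDARD = "standard"  # e.g. client-facing content
    ENHANCED = "enhanced"  # e.g. automated customer decisions


# Hypothetical minimum controls per tier; adjust to your own policy.
CONTROLS = {
    Tier.LIGHT: ["approved tool", "no confidential data in prompts"],
    Tier.STANDARD: ["approved tool", "human review before publication", "AI disclosure"],
    Tier.ENHANCED: ["approved tool", "human review before publication", "AI disclosure",
                    "DPIA", "named owner", "quarterly monitoring"],
}


def governance_tier(client_facing: bool, automated_decision: bool) -> Tier:
    """Classify by what the output affects, not by organisation size."""
    if automated_decision:
        return Tier.ENHANCED
    if client_facing:
        return Tier.STANDARD
    return Tier.LIGHT


ideation = governance_tier(client_facing=False, automated_decision=False)
pricing = governance_tier(client_facing=True, automated_decision=True)
print(ideation, CONTROLS[ideation])
print(pricing, CONTROLS[pricing])
```

Because each tier's controls are a superset of the one below, moving a use case up a tier only ever adds obligations.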

The NIST AI Risk Management Framework provides the most accessible starting point for marketing teams. It's free, flexible, and doesn't require certification. Its four core functions apply directly to marketing operations without extensive adaptation: GOVERN (establish a risk management culture), MAP (establish the context of each AI system), MEASURE (analyse and track risks using quantitative, qualitative, or mixed methods), and MANAGE (prioritise, treat, and monitor risks).
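To see how the four functions map onto day-to-day marketing work, the sketch below tags a list of governance activities with the function each one serves and reports any function that nothing covers yet. Only the four function names come from the NIST AI RMF; the activity list is a hypothetical example.

```python
from __future__ import annotations

from collections import Counter
from enum import Enum


class RmfFunction(Enum):
    GOVERN = "govern"
    MAP = "map"
    MEASURE = "measure"
    MANAGE = "manage"


# Hypothetical activities for a marketing team, each tagged with one RMF function.
activities = [
    ("Publish an AI acceptable use policy", RmfFunction.GOVERN),
    ("Assign an AI governance lead", RmfFunction.GOVERN),
    ("List AI tools with purpose and data used", RmfFunction.MAP),
    ("Spot-check AI drafts for factual errors each month", RmfFunction.MEASURE),
]


def coverage_gaps(items: list[tuple[str, RmfFunction]]) -> list[RmfFunction]:
    """Return RMF functions that no activity addresses yet, in RMF order."""
    counts = Counter(function for _, function in items)
    return [f for f in RmfFunction if counts[f] == 0]


print(coverage_gaps(activities))  # MANAGE has no activity in this example
```

A gap report like this is a quick way to check that a policy does not stop at GOVERN and MAP, which are often the first two functions a team gets to.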

For agencies where AI is core to their offering, ISO/IEC 42001 represents the world's first certifiable AI management system standard. BSI CEO Susan Taylor Martin notes: "For AI to be a powerful force for good, trust is critical. The publication of the first international AI management system standard is an important step in empowering organizations to responsibly manage the technology." Certification provides client-facing credibility that differentiates in competitive pitches.

Practical Implementation Roadmap

Phase 1: Foundation (Months 1-2). Audit current AI tool usage across the organisation. Assign an AI Governance Lead (this can be a part-time role in SMEs). Draft an AI Acceptable Use Policy covering approved tools, data classification, and human review requirements. Conduct an initial risk assessment of existing tools.
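The Phase 1 audit can start as a comparison between the tools people actually use and what the Acceptable Use Policy allows. This sketch assumes a hypothetical policy that lists approved tools, each paired with the most sensitive data classification it may receive. It flags unapproved tools and data that exceeds a tool's limit. The tool names and the three-level classification scheme are placeholders.

```python
from __future__ import annotations

from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical classification scheme; higher means more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


# Hypothetical policy: approved tool -> most sensitive data class allowed.
APPROVED_TOOLS = {
    "enterprise-llm": DataClass.CONFIDENTIAL,
    "image-generator": DataClass.PUBLIC,
}


def audit_usage(usage: list[tuple[str, str, DataClass]]) -> list[str]:
    """Check (team, tool, data_class) records against the policy; return findings."""
    findings = []
    for team, tool, data_class in usage:
        limit = APPROVED_TOOLS.get(tool)
        if limit is None:
            findings.append(f"{team}: '{tool}' is not an approved tool")
        elif data_class > limit:
            findings.append(f"{team}: {data_class.name} data sent to '{tool}' "
                            f"(limit {limit.name})")
    return findings


survey = [
    ("content", "enterprise-llm", DataClass.INTERNAL),
    ("design", "image-generator", DataClass.INTERNAL),
    ("sales", "free-chatbot", DataClass.CONFIDENTIAL),
]
for finding in audit_usage(survey):
    print(finding)
```

The "sales" row illustrates the pattern behind the KPMG finding that 39% of UK workers have uploaded company information into public AI tools: the problem only becomes visible once usage is recorded and compared against the policy.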

Phase 2: Governance Structure (Months 2-3). Establish an approval workflow for new AI tools. Create a vendor due diligence checklist covering security certifications, data handling, bias testing, and contractual protections. Develop a training programme covering AI capabilities, limitations, and verification requirements. Update client contracts and SOWs to cover AI disclosure.
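Keeping the vendor due diligence checklist as structured data means every new tool is assessed the same way. The sketch below uses the four checklist areas named above as keys and reports which areas a vendor has not yet evidenced. The `VendorReview` class and the sample evidence notes are hypothetical. It is a record-keeping aid, not a scoring method from any standard.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The four areas from the Phase 2 checklist.
CHECKLIST_AREAS = (
    "security_certifications",
    "data_handling",
    "bias_testing",
    "contractual_protections",
)


@dataclass
class VendorReview:
    vendor: str
    evidence: dict[str, str] = field(default_factory=dict)  # area -> evidence note

    def missing_areas(self) -> list[str]:
        """Areas with no recorded evidence, in checklist order."""
        return [area for area in CHECKLIST_AREAS if not self.evidence.get(area)]

    def ready_for_approval(self) -> bool:
        return not self.missing_areas()


# Hypothetical vendor and evidence notes.
review = VendorReview("example-ai-vendor", {
    "security_certifications": "ISO/IEC 27001 certificate on file",
    "data_handling": "DPA signed; vendor confirms no training on client data",
})
print(review.missing_areas())       # ['bias_testing', 'contractual_protections']
print(review.ready_for_approval())  # False
```

Requiring written evidence for every area, instead of a tick box, leaves an audit trail that can be revisited during the annual governance audit.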

Phase 3: Risk Management (Months 3-4). Conduct DPIAs for high-risk AI applications. Implement monitoring and review processes. Create incident response procedures. Document an AI inventory recording each tool's purpose, risks, and owner.
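An AI inventory is easier to keep current when each entry carries its own review date. This sketch assumes a hypothetical record holding the purpose, risks, and owner listed above, plus a last-reviewed date. It flags entries older than a 90-day window as a stand-in for the quarterly reviews in Phase 4. The field names, sample entries, and the 90-day figure are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=90)  # assumption: roughly quarterly


@dataclass
class InventoryEntry:
    tool: str
    purpose: str
    risks: list[str]
    owner: str
    last_reviewed: date

    def review_due(self, today: date) -> bool:
        """True if the last review is older than the review interval."""
        return today - self.last_reviewed > REVIEW_INTERVAL


# Hypothetical inventory entries.
inventory = [
    InventoryEntry("enterprise-llm", "first drafts of blog posts",
                   ["factual errors", "tone drift"], "Head of Content",
                   date(2025, 12, 1)),
    InventoryEntry("lead-scoring-model", "prioritise inbound leads",
                   ["bias in scoring", "personal data profiling"], "Marketing Ops Lead",
                   date(2025, 9, 15)),
]

today = date(2026, 1, 31)
overdue = [entry.tool for entry in inventory if entry.review_due(today)]
print(overdue)  # ['lead-scoring-model']
```

Passing `today` in explicitly, rather than calling `date.today()` inside the method, keeps the check reproducible when the inventory is reviewed retrospectively.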

Phase 4: Continuous Improvement (Ongoing). Review policies quarterly, given the pace of regulatory change; refresh training regularly; monitor ICO, ASA, and government announcements; and run an annual governance audit. This isn't a set-and-forget exercise: AI governance requires ongoing attention.

Whitehat's Responsible AI Principles

We're sharing the principles that guide Whitehat SEO's own AI usage, not because we think they're perfect, but because we believe agencies should be transparent about their approach. These principles inform our AI consultancy work with clients.

1. Disclosure is non-negotiable. We tell clients when AI has been involved in our work, even when there's no legal requirement to do so. Transparency builds trust; concealment destroys it. This applies to content creation, data analysis, and any other deliverable where AI has played a meaningful role.

2. Human-in-the-loop is mandatory, not optional. Every piece of AI-generated content receives human review before delivery. Every AI-driven recommendation gets human validation. The productivity argument for removing human oversight is a false economy—the reputational cost of a single error exceeds the labour savings of hundreds of successful outputs.

3. The regulatory window is an opportunity to lead, not a licence to delay. UK businesses that establish responsible AI practices now will be ready when legislation arrives. Those that treat the current flexibility as permission to ignore governance will face rushed compliance, higher costs, and client trust deficits.

4. Responsible AI is a competitive advantage, not a compliance burden. The DMA research showing 87% of UK business leaders expect responsible AI to provide competitive advantage isn't aspirational—it's predictive. Clients increasingly ask about AI governance in RFPs. Agencies without credible answers will lose to those with frameworks.

5. Agencies must lead on governance or accept commoditisation. If 100% of agencies use AI but only a fraction govern it responsibly, the ungoverned majority compete solely on price. The governed minority compete on trust. This isn't just ethics—it's positioning strategy.
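Principles 1 and 2 can be enforced at the point of delivery instead of relying on memory. The sketch below assumes a hypothetical `Deliverable` record. It refuses to release any AI-assisted item that lacks a named human reviewer and appends a disclosure line to anything that passes. The field names and disclosure wording are illustrative assumptions, not a description of any production system.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Deliverable:
    title: str
    body: str
    ai_assisted: bool
    reviewed_by: Optional[str] = None  # name of the human reviewer, if any


class ReleaseBlocked(Exception):
    """Raised when a deliverable fails the human-review rule."""


# Hypothetical disclosure wording; adapt to your own client agreements.
DISCLOSURE = "This deliverable was produced with AI assistance and reviewed by {reviewer}."


def release(item: Deliverable) -> str:
    """Return the text to send to the client, enforcing review and disclosure."""
    if not item.ai_assisted:
        return item.body
    if not item.reviewed_by:
        raise ReleaseBlocked(f"'{item.title}' is AI-assisted but has no human reviewer")
    return f"{item.body}\n\n{DISCLOSURE.format(reviewer=item.reviewed_by)}"


draft = Deliverable("Q1 blog post", "Draft text...", ai_assisted=True)
try:
    release(draft)
except ReleaseBlocked as err:
    print("Blocked:", err)

draft.reviewed_by = "Senior Editor"
print(release(draft))
```

Raising an exception rather than returning a warning means an unreviewed AI-assisted deliverable cannot slip through simply because someone ignored a message.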

Frequently Asked Questions

Does the EU AI Act apply to UK businesses?

Yes, if you serve EU customers. The EU AI Act has extraterritorial reach: it applies to providers placing AI systems on the EU market and to AI systems whose output is used within EU territory, wherever the business is based. UK businesses serving EU markets face dual compliance requirements: UK principles domestically plus EU AI Act obligations for EU-facing operations.

Do I need to disclose AI-generated content in UK marketing?

No blanket UK legal requirement exists yet, but disclosure is commercially essential. YouGov research shows 86% of Britons expect AI usage disclosure, and 52% report reduced engagement when they suspect undisclosed AI content. The ASA has confirmed advertisers cannot abdicate responsibility for AI-generated creative.

What AI governance framework should UK marketers use?

The NIST AI Risk Management Framework provides the most accessible starting point for marketing teams. It's free, flexible, and doesn't require certification. For agencies where AI is central to their offering, ISO/IEC 42001 provides a certifiable standard to demonstrate responsible AI practices to clients.

When will the UK introduce AI legislation?

The UK government has signalled AI legislation is expected in 2026. Secretary of State Liz Kendall indicated the approach will focus on specific areas rather than a comprehensive EU-style Act. Businesses should prepare now—the principles-based approach means regulators like the ICO already have enforcement powers.

Is a DPIA required for AI marketing tools?

Yes, in most cases. Data Protection Impact Assessments are mandatory under UK GDPR where processing is likely to result in a high risk to individuals, for example systematic profiling with significant effects or the use of new technologies likely to pose a high risk. AI-powered personalisation, lead scoring, and automated segmentation typically trigger DPIA requirements.

Key Takeaways

- The UK's regulatory window closes in 2026 — Establish AI governance now while you control the terms, not when compliance is mandatory and everyone's scrambling.

- Consumer trust in AI sits at just 42% — With 86% expecting disclosure, proactive transparency is a competitive advantage, not a confession.

- UK businesses serving EU customers face dual compliance — The EU AI Act's extraterritorial reach means many UK agencies need to comply regardless of headquarters location.

- 100% of agencies use AI but few govern it — This gap between adoption and governance is where brand damage happens and where differentiation opportunities exist.

- Human-in-the-loop isn't optional for B2B marketing — The productivity savings from removing human oversight never compensate for the reputational cost of errors.

Build Your AI Governance Framework

Whitehat SEO helps B2B marketing teams implement responsible AI practices that build client trust and prepare for regulatory change. Our AI consultancy includes policy development, DPIA support, and governance training.

Book an AI Governance Consultation

Not ready for a consultation? Download our free AI policy template to get started.

References

- YouGov UK Media Attitudes to AI Report (2024) — Consumer trust and disclosure expectation data

- UK Government Pro-Innovation AI Regulation White Paper (2024) — Five principles framework and regulatory approach

- ICO Automated Decision-Making and Profiling Guidance (2024) — GDPR requirements for AI marketing tools

- ISBA/IPA 12 Guiding Principles for Generative AI in Advertising (2023) — UK advertising industry self-regulatory framework

- DMA Responsible AI Innovation Position (2024) — Marketing industry perspective on human-AI collaboration

- ASA AI in Advertising Guidance (2024) — Advertiser responsibility for AI-generated content

- NIST AI Risk Management Framework (2023) — Practical governance framework for organisations

- ISO/IEC 42001:2023 AI Management Systems (2023) — International certifiable AI governance standard

- BSI Responsible AI Management Guidance (2024) — UK standards body perspective on AI governance