UK AI POLICY: A TEMPLATE FOR A NEW DIRECTION


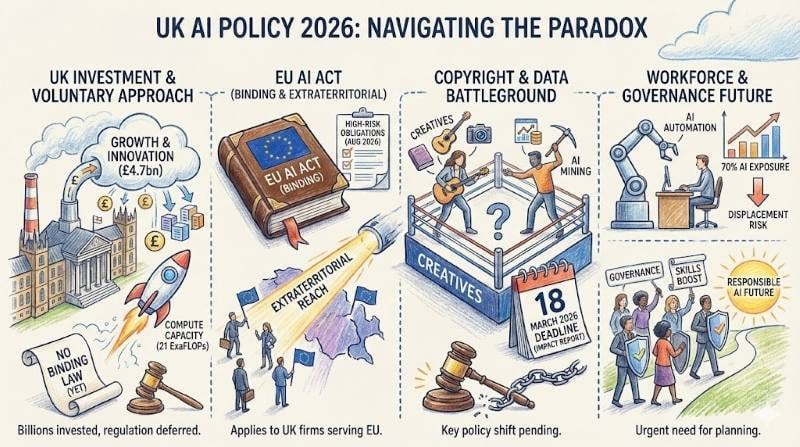

The UK has staked its AI future on growth, investment, and voluntary cooperation—not binding law. As of February 2026, no AI-specific legislation exists in the United Kingdom, despite repeated government pledges. Labour has largely continued and even accelerated the Conservative-era pro-innovation approach, pouring billions into compute infrastructure and public-sector adoption whilst deferring the hard work of regulation.

UK AI Policy: What Your Business Must Know Right Now

The UK has invested billions in AI infrastructure whilst delaying binding legislation. Here's what that paradox means for your compliance strategy, your marketing operations, and your competitive position.

This growth-first stance has opened a striking gap between the UK's ambitious AI adoption agenda and the EU's comprehensive, legally binding AI Act, a gap that creates both competitive opportunity and compliance complexity for businesses operating across both markets.

This matters because the UK is Europe's largest AI economy. According to UK government data, 185 tech startups are now valued over $1 billion, and UK AI firms raised £4.7 billion in 2025 alone. Yet roughly 70% of UK workers hold jobs with significant AI task exposure, and early evidence suggests measurable employment displacement is already underway. For businesses using AI in marketing operations, understanding this regulatory landscape is essential for responsible deployment.

The UK Has No AI Legislation—And May Not Get One Soon

The most conspicuous gap in UK AI policy is legislation. The July 2024 King's Speech promised a bill to impose requirements on developers of the "most powerful" AI models and make voluntary safety commitments legally binding. That bill never materialised.

In June 2025, the government pivoted from a short, focused bill to a more comprehensive measure in the next parliamentary session. By December 2025, Technology Secretary Liz Kendall told the Science, Innovation and Technology Committee she envisioned addressing AI harms through targeted, sector-specific action "rather than a big all-encompassing bill."

The practical reality: Legal commentators, including Osborne Clarke, Taylor Wessing, and the International Association of Privacy Professionals (IAPP), now assess that a dedicated AI bill is unlikely before the second half of 2026 at the earliest. Even if included in a May 2026 King's Speech, practical legislative impact would not arrive until late 2026 or 2027.

The Data (Use and Access) Act 2025: What Changed

The most significant enacted legislation with AI relevance is the Data (Use and Access) Act 2025, which received Royal Assent on 19 June 2025. It doesn't regulate AI directly but reshapes the legal terrain in two critical ways:

1. Relaxed automated decision-making rules: Organisations can now use any lawful basis for solely automated decisions with legal or similarly significant effects, provided they implement safeguards including transparency, meaningful human intervention, and the right to contest. Tighter restrictions remain where special category data is involved. Key provisions commenced on 5 February 2026.

2. Copyright deadline: The Act sets a statutory deadline of 18 March 2026 for the government to publish an economic impact assessment and report on the use of copyright works in AI development—the most consequential near-term policy milestone.

For businesses managing customer data through platforms like HubSpot, these changes have direct implications for how they configure automated workflows and marketing automation systems.
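To make the automated decision-making safeguards concrete for a workflow, the minimal Python sketch below checks a decision record before a solely automated decision with legal or similarly significant effects is released. The `AutomatedDecision` fields and the `missing_safeguards` helper are hypothetical illustrations, not HubSpot features or the Act's wording, and the check covers only the three safeguards named in the Act summary above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AutomatedDecision:
    """Hypothetical record of one automated decision in a marketing workflow."""
    decision_id: str
    solely_automated: bool        # no meaningful human involvement in the outcome
    significant_effect: bool      # legal or similarly significant effect on the person
    notice_given: bool            # person told that a solely automated decision was made
    human_review_available: bool  # a reviewer who can change the outcome can be requested
    contest_route: str            # e.g. a URL or mailbox; empty if none exists


def missing_safeguards(decision: AutomatedDecision) -> List[str]:
    """Return the safeguards this sketch expects but cannot find.

    Decisions that are not both solely automated and significant fall
    outside the check and return an empty list.
    """
    if not (decision.solely_automated and decision.significant_effect):
        return []
    gaps = []
    if not decision.notice_given:
        gaps.append("transparency")
    if not decision.human_review_available:
        gaps.append("human intervention")
    if not decision.contest_route.strip():
        gaps.append("right to contest")
    return gaps


if __name__ == "__main__":
    example = AutomatedDecision(
        decision_id="d-001",
        solely_automated=True,
        significant_effect=True,
        notice_given=True,
        human_review_available=False,
        contest_route="",
    )
    print(missing_safeguards(example))  # ['human intervention', 'right to contest']
```

The example decision is flagged because it offers no route to human review or to contest the outcome; a workflow could, for instance, hold such decisions until the gaps are closed.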

Labour's AI Strategy: Billions Invested, Regulation Deferred

The Labour government's AI strategy rests on the AI Opportunities Action Plan, authored by Matt Clifford and published in January 2025. All 50 recommendations were accepted. A "One Year On" progress report published on 29 January 2026 reported 38 of 50 actions met—a 76% completion rate tracked on a public dashboard.

The Investment Numbers

The 2025 Spending Review allocated £2 billion for AI through 2029/30, including:

- Approximately £1 billion for sovereign compute capacity

- £750 million for a next-generation supercomputer in Edinburgh

- Up to £500 million for a UK Sovereign AI Unit

- £240 million for the AI Security Institute

UK AI compute capacity surged from 2 ExaFLOPs in 2024 to 21 ExaFLOPs in 2025, targeting 420 ExaFLOPs by 2030. Five AI Growth Zones have been designated across Great Britain—Culham, Cambois, North Wales, South Wales, and Lanarkshire—generating £28.2 billion in private investment and over 15,000 jobs.
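The compute figures imply a demanding trajectory. As a rough worked check, assuming 21 ExaFLOPs is the 2025 baseline and the 420 ExaFLOPs target is reached five years later in 2030, the required compound annual growth rate $r$ is:

$$
(1 + r)^{5} = \frac{420}{21} = 20
\quad\Rightarrow\quad
r = 20^{1/5} - 1 \approx 0.82
$$

That is roughly 82% growth every year to 2030: slower than the 2024 to 2025 jump ($21/2 = 10.5\times$), but it must be sustained five years in a row.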

- £4.7bn: raised by UK AI firms in 2025

- 21 ExaFLOPs: UK compute capacity in 2025 (10× growth)

- 2.4m: NHS chest X-rays now AI-assisted

The Philosophical Shift

Despite manifesto commitments to binding regulation, Labour has pivoted decisively toward growth. The February 2025 Paris AI Action Summit saw the UK and US as the only countries refusing to sign the international AI declaration. The AI Safety Institute was rebranded to the "AI Security Institute" in February 2025, explicitly excluding bias and freedom of speech from its remit to focus on national security threats. The October 2025 "Blueprint for AI Regulation" introduced AI Growth Labs—regulatory sandboxes where rules are temporarily relaxed under supervision.

Copyright Reform: The Most Contentious Battleground

The UK copyright debate has become the most contentious domain of AI policy. The government's initially preferred approach—expanding text and data mining exceptions to cover commercial AI training, with an opt-out mechanism for rightsholders—has been effectively abandoned following overwhelming opposition.

A December 2024 consultation received over 11,500 responses. The results were unambiguous: 88% supported mandatory licensing (Option 1), whilst only 3% backed the government's preferred opt-out model (Option 3). Over 1,000 music artists created a "silent album" in protest. High-profile figures including Paul McCartney, Kate Bush, Elton John, and Hans Zimmer spoke out. The Writers' Guild called the proposal "industrial-scale theft."

What the Government Said

In January 2026, Culture Secretary Lisa Nandy acknowledged before the House of Lords Communications and Digital Committee that "it was a mistake to start with a preferred model, the opt-out model." Technology Secretary Liz Kendall stated that creative industries' concerns about reward and control must be "at the heart of the way forward."

The Getty Images Judgment: A Warning Sign

The landmark Getty Images v Stability AI judgment in November 2025, the first UK court ruling on AI copyright, exposed a critical gap. Getty had already abandoned its primary infringement claim at trial, after accepting it could not prove training occurred in the UK. On the remaining claim, the High Court found that Stable Diffusion's model weights did not "store, reproduce, or contain" Getty's images, so the model was not an "infringing copy."

The case underscored that current law is inadequate for addressing AI training conducted overseas. Getty has been granted permission to appeal. For businesses creating content—whether through traditional means or AI-assisted tools—understanding these evolving copyright boundaries is essential. Whitehat's approach to AI usage in marketing prioritises transparency and human oversight precisely because of this legal uncertainty.

UK vs EU: The Dual Compliance Reality

The philosophical gap between UK and EU approaches is now a structural feature of the regulatory landscape. The EU AI Act—the world's first comprehensive binding AI law—entered into force in August 2024 with phased implementation:

- 2 February 2025: Prohibitions on unacceptable-risk AI and AI literacy requirements

- 2 August 2025: Rules for general-purpose AI models

- 2 August 2026: Majority of high-risk system obligations (though the Commission's "Digital Omnibus" proposal may delay some deadlines)
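The phased EU AI Act dates can be turned into a simple planning lookup. This is a minimal Python sketch, assuming the three milestones listed here are the only ones tracked; the `EU_AI_ACT_MILESTONES` table and `obligations_in_force` helper are hypothetical, and the Digital Omnibus proposal may shift the August 2026 date.

```python
from datetime import date
from typing import List

# Hypothetical milestone table built from the phased dates listed above.
# The Commission's Digital Omnibus proposal may move some high-risk deadlines,
# so treat these entries as a snapshot rather than a fixed schedule.
EU_AI_ACT_MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk AI; AI literacy requirements"),
    (date(2025, 8, 2), "Rules for general-purpose AI models"),
    (date(2026, 8, 2), "Majority of high-risk system obligations"),
]


def obligations_in_force(on: date) -> List[str]:
    """Return every milestone whose start date is on or before the given date."""
    return [label for start, label in EU_AI_ACT_MILESTONES if start <= on]


if __name__ == "__main__":
    # On the UK copyright report deadline, the first two phases already apply.
    for item in obligations_in_force(date(2026, 3, 18)):
        print(item)
```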

| Aspect | UK approach | EU AI Act |
|---|---|---|
| Risk classification | None (principles-based) | Mandatory four-tier system |
| Central regulator | No (sector regulators) | Yes (AI Office) |
| Conformity assessment | Voluntary | Mandatory for high-risk systems |
| Maximum penalties | Existing regulator powers | €35m or 7% of global annual turnover, whichever is higher |
| UK–EU data adequacy | EU adequacy decision for the UK renewed until 2031 | No AI-specific mutual recognition arrangement |

For UK businesses, the practical consequence is a dual compliance burden. Any AI system whose outputs are used in the EU falls under the EU AI Act's extraterritorial reach, regardless of where the provider is based. UK firms must simultaneously satisfy UK principles-based guidance and EU legal requirements, typically defaulting to the stricter EU standard—the so-called "Brussels Effect."

One significant relief came in December 2025, when the European Commission renewed UK data adequacy until 2031. However, no AI-specific mutual recognition arrangement exists. Businesses using integrated platforms like HubSpot CRM should ensure their data governance configurations reflect UK GDPR and, where they serve EU customers or process EU residents' data, the applicable EU GDPR and EU AI Act requirements.

Corporate AI Governance: What's Required Now

The absence of binding AI legislation does not mean the absence of obligation. UK businesses face a web of existing requirements that apply to AI, and the direction of travel points firmly toward more structured governance.

The ICO Is Already Enforcing

The Information Commissioner's Office is the most active regulatory force. The outcomes of its generative AI consultation series, published in December 2024, concluded that legitimate interests can support web-scraping for AI training, but organisations must assess alternatives like data licensing, define purposes specifically (not just "training AI models"), and conduct broad harm assessments.

A statutory code of practice on AI and automated decision-making—mandated by the Data (Use and Access) Act—is expected during 2026 and will represent the closest thing to binding AI-specific regulation. The ICO's January 2026 report on agentic AI confirmed that agentic systems remain fully subject to UK GDPR, with particular attention to decisions producing legal or similarly significant effects.

Best-Practice Governance Requirements

Best-practice corporate AI governance in the UK now requires, at minimum:

- Comprehensive AI inventory and risk classification of all systems in use, aligned to both UK principles and EU AI Act categories

- Board-level accountability with designated AI oversight (fewer than 25% of companies currently have board-approved AI policies)

- Meaningful human oversight of automated decisions, with reviewers who can genuinely challenge and override outcomes

- Data governance foundations—42% of UK companies abandoned AI initiatives in 2025 due to inadequate data preparation

- Cross-jurisdictional compliance frameworks meeting the stricter of UK and EU requirements
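The inventory and cross-jurisdictional requirements could start life as a simple register. The minimal Python sketch below assumes each system has already been assigned one of the EU AI Act's four risk tiers; the `AISystem` fields, the `EU_BASELINE` labels, and the example systems are hypothetical, and adopting the EU standard whenever outputs reach the EU mirrors the "stricter of" approach described in this article rather than any official method. UK principles and existing law continue to apply in every case.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class EURiskTier(IntEnum):
    """EU AI Act risk tiers, ordered from least to most restrictive."""
    MINIMAL = 0
    LIMITED = 1       # transparency obligations
    HIGH = 2          # conformity assessment, documentation, risk management
    UNACCEPTABLE = 3  # prohibited practices


# Hypothetical one-line summaries of what each tier demands.
EU_BASELINE = {
    EURiskTier.MINIMAL: "EU minimal risk: no tier-specific duties",
    EURiskTier.LIMITED: "EU transparency obligations",
    EURiskTier.HIGH: "EU high-risk obligations",
    EURiskTier.UNACCEPTABLE: "prohibited in the EU",
}


@dataclass
class AISystem:
    """Hypothetical inventory entry; tier assignment is assumed to happen elsewhere."""
    name: str
    owner: str                # accountable person or team
    eu_tier: EURiskTier
    outputs_used_in_eu: bool  # triggers the EU AI Act's extraterritorial reach


def compliance_baseline(system: AISystem) -> str:
    """Return the standard to design to: EU rules if outputs reach the EU, else UK."""
    if system.outputs_used_in_eu:
        # Default to the stricter EU requirement for this system's tier.
        return EU_BASELINE[system.eu_tier]
    return "UK principles and existing law"


def review_queue(inventory: List[AISystem]) -> List[str]:
    """Return system names ordered from most to least restrictive EU tier."""
    ranked = sorted(inventory, key=lambda s: s.eu_tier, reverse=True)
    return [s.name for s in ranked]


if __name__ == "__main__":
    inventory = [
        AISystem("Email subject-line generator", "Marketing", EURiskTier.MINIMAL, True),
        AISystem("Website chatbot", "Customer service", EURiskTier.LIMITED, True),
        AISystem("CV screening model", "HR", EURiskTier.HIGH, False),
    ]
    for system in inventory:
        print(f"{system.name} ({system.owner}): {compliance_baseline(system)}")
    print(review_queue(inventory))
```

Sorting by tier gives the board a most-restrictive-first review order; the CV screening entry stays on UK rules here only because its outputs are assumed not to reach the EU.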

ISO/IEC 42001:2023 has emerged as the primary certifiable AI management system standard. BSI became the first certification body accredited by UKAS to certify against it, with organisations like Darktrace achieving certification in July 2025. For organisations operating in both UK and EU markets, ISO 42001 offers a practical framework for implementing EU AI Act compliance in a "repeatable and auditable" way. If you're considering how to structure AI governance alongside your marketing technology stack, our guide to AI ethics consultants outlines the key questions to address.

The Workforce Impact: Policy Lags Behind Reality

The labour market data paints an increasingly urgent picture. An estimated 70% of UK workers hold occupations containing tasks AI could perform or enhance—higher than the US at approximately 60%, reflecting the UK's service-heavy economy.

A King's College London study published in October 2025, analysing millions of job postings and LinkedIn profiles, found that firms with highly AI-exposed workforces reduced total employment by 4.5% on average, with junior positions falling 5.8%. High-salary occupations saw a 34.2% decline in job postings.

The Warning Signs

McKinsey's July 2025 UK report found job ads in high-AI-exposure roles declined 38% since May 2022, compared to 21% for low-exposure roles.

ONS data shows AI adoption by businesses reached 23% by September 2025, up from just 9% two years earlier—yet only 21% of workers feel confident using AI.

Digital-sector employment dropped for the first time in a decade in 2024, with 16–24-year-olds in computer programming roles falling 44% in a single year.

The government's response centres on the AI Skills Boost programme, which has delivered over 1 million free AI courses since its June 2025 launch and now targets 10 million workers by 2030. DSIT established an AI and Future of Work Unit in January 2026, a cross-government body tasked with monitoring AI's labour market impacts, but its mandate is to "boost economic growth" alongside supporting workers. The growth-first philosophy remains dominant.

Key Dates: What Businesses Should Prepare For

The UK's AI regulatory landscape will shift meaningfully over the next 18 months, even if comprehensive legislation remains elusive. Track these milestones closely:

18 March 2026

The most important near-term date: government must publish its economic impact assessment and copyright report, setting the direction for AI training and transparency legislation.

May 2026 (Possible)

A King's Speech could announce an AI bill, but multiple analysts consider this unlikely. If announced, any bill would take months to progress through Parliament.

2 August 2026

EU AI Act high-risk system obligations are due to apply, including conformity assessments, technical documentation, risk management, and transparency rules, although the Commission's Digital Omnibus proposal may delay some deadlines. UK businesses serving EU customers must be prepared.

During 2026

The ICO's statutory code of practice on AI and automated decision-making is expected, establishing legally backed standards. The MHRA's National Commission is due to publish recommendations on AI in healthcare. HM Treasury is expected to designate its first Critical Third Parties, potentially capturing major AI service providers.

What This Means for Your Business

The UK's AI policy in 2025–2026 is defined by a paradox: extraordinary investment ambition paired with regulatory hesitation. The government has delivered impressively on infrastructure—compute capacity grew tenfold in a single year, billions in private investment flowed in, and public-sector AI adoption accelerated. But the promised binding framework remains absent, leaving the UK reliant on a patchwork of existing regulations, voluntary commitments, and sector-specific guidance.

For businesses, the practical takeaway is clear: prepare for EU AI Act compliance as the binding baseline whilst building governance frameworks that satisfy UK requirements. The UK's lighter-touch approach offers flexibility but not exemption—the ICO, FCA, and MHRA are actively applying existing powers to AI.

The copyright reset is the most consequential policy shift underway. The government's admission that starting with a preferred opt-out model was "a mistake," combined with the March 2026 statutory deadline, means this area will see the most substantive policy development in the near term.

The workforce dimension adds urgency: with roughly 70% of UK workers in occupations exposed to AI and early evidence of employment displacement, the race between AI adoption and worker readiness will shape the political appetite for regulation in the years ahead. Businesses that invest now in responsible AI governance (transparent policies, human oversight, proper data foundations) will be better positioned regardless of which direction policy takes.

Frequently Asked Questions

Does the UK have AI-specific legislation?

No. As of February 2026, the UK has no dedicated AI legislation. Legal analysts assess that a bill is unlikely before the second half of 2026 at the earliest, and any legislation that passes would not have practical effect until late 2026 or 2027. Businesses must rely on existing laws (data protection, equality, consumer protection) and sector-specific guidance.

Do UK businesses need to comply with the EU AI Act?

Yes, if your AI systems' outputs are used in the EU. The EU AI Act has extraterritorial reach: it applies to providers placing AI systems on the EU market or putting them into service there, and to providers and deployers based outside the EU whose systems' output is used in the EU. Most UK businesses serving EU customers will need to comply with both UK guidance and EU requirements.

What is the most important upcoming deadline for UK AI policy?

18 March 2026 is the critical near-term date. The government must publish its statutory economic impact assessment and report on AI and copyright, which will set the direction for transparency legislation and AI training rules. For EU compliance, 2 August 2026 brings high-risk AI system obligations into force.

How should UK businesses prepare for AI regulation?

Create a comprehensive inventory of all AI systems you use or deploy. Implement board-level AI governance with clear accountability. Establish meaningful human oversight for automated decisions. Ensure your data governance foundations are solid—42% of UK companies abandoned AI initiatives in 2025 due to poor data preparation. Default to EU AI Act compliance as your baseline standard.

What certification standards exist for AI governance?

ISO/IEC 42001:2023 is the primary certifiable AI management system standard. BSI was the first body accredited to certify against it. For UK businesses operating in both UK and EU markets, ISO 42001 provides a practical framework for meeting EU AI Act requirements in a documented, auditable way.

References & Further Reading

- UK Government. AI Opportunities Action Plan. Published 13 January 2025.

- UK Government. AI Opportunities Action Plan: One Year On. Published 29 January 2026.

- UK AI Opportunities Action Plan Dashboard. delivery.ai.gov.uk. Progress tracker.

- European Commission. EU AI Act Regulatory Framework. Implementation timeline and guidance.

- EU Artificial Intelligence Act. Implementation Timeline. Key dates and obligations tracker.

- Clifford Chance. Unpacking the UK's AI Action Plan. Legal analysis, January 2025.

- Hogan Lovells. AI Opportunities Action Plan: A Critical Review. Legal perspective, January 2025.

- Reed Smith. The UK Government's AI Growth Agenda in 2025. April 2025.
